I think something might be wrong with your Neovim if it accumulated 70 gigs of log files.

Don't worry, they've just been using Neovim for 700 years; it'll be alright.

Sure, that's also a possibility. I'd be interested in their time machine though.

So I found out that qBittorrent writes an error to its log whenever it tries to write to a disk that is full...

Every time my disk was full I would clear out some old torrents, then all the pending log entries would get written and the disk would be full again. The log was well over 50 GB by the time I figured out that I'm an idiot. Hooray for having dedicated machines.

That's not entirely your fault; that's pathological on the part of the program.

I once did something even dumber. When I was new to Linux and the CLI, I added a line to my shell config that would append itself to the shell config. So I pretty much had exponential growth of my shell config, and my shell took ~20 seconds to start up before I found the broken snippet.

ncdu is the best utility for this type of thing. I use it all the time.

I install ncdu on any machine I set up, because installing it when it's actually needed may be tricky.

Came in expecting a story of tragedy, congrats. 🎉

But did he even look at the log file? They don't get that big when things are running properly, so it was probably warning him about something. Like "Warning: Whatever you do, don't delete this file. It contains the protocol conversion you will need to interface with the alien computers to prevent their takeover."

PTSD from the days long ago when the X11 error log would fill up the disk whenever certain applications were used.

Try ncdu as well. No instructions needed, just run ncdu /path/to/your/directory.

If you want to scan without crossing filesystem boundaries (e.g. into other mounted partitions), run it with -x.
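For anyone curious what `-x` actually changes: below is a rough Python sketch (not ncdu's implementation) that totals file sizes under a directory and refuses to descend into any subdirectory that lives on a different device than the starting point, which is what "not crossing filesystem boundaries" boils down to. The function name `disk_usage` is hypothetical, and it counts apparent sizes (`st_size`), whereas ncdu and du report allocated disk usage by default.

```python
import os
import stat


def disk_usage(root: str, one_file_system: bool = True) -> int:
    """Sum apparent file sizes (bytes) under root.

    With one_file_system=True, directories on a different device than
    root are skipped, similar in spirit to `du -x` / `ncdu -x`.
    Symlinks are not followed; hard links are counted once per path.
    """
    root_dev = os.stat(root).st_dev
    total = 0
    stack = [root]
    while stack:
        path = stack.pop()
        try:
            with os.scandir(path) as it:
                entries = list(it)
        except OSError:
            continue  # unreadable directory: skip it and keep going
        for entry in entries:
            try:
                st = entry.stat(follow_symlinks=False)
            except OSError:
                continue  # file vanished or is unreadable
            if stat.S_ISDIR(st.st_mode):
                if one_file_system and st.st_dev != root_dev:
                    continue  # another filesystem is mounted here: don't descend
                stack.append(entry.path)
            elif stat.S_ISREG(st.st_mode):
                total += st.st_size
    return total
```

Calling `disk_usage("/")` would then skip things like a separately mounted /home, while `disk_usage("/", one_file_system=False)` would include it.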

MINI GUIDE TO FREEING UP DISK SPACE (by a datahoarder idiot who runs on 5 gigs of free space on a 4 TB drive)

You will find more trash by combining 4 tools: Czkawka (duplicates and big files), dupeGuru (logs), VideoDuplicateFinder by 0x90d, and tune2fs.

VDF finds duplicates by comparing multiple frames of each video (including reversed frames), and lets you set the similarity percentage and the video duration. It is the best tool of its kind, with nothing to match it, and it uses ffmpeg as its backend.

A certain amount of space on each partition is reserved for root and privileged processes, but users who create a separate /home partition do not need this reserved space there. By default 5% is reserved, no matter whether your disk is 1 TB, 2 TB or 4 TB. To change this, run sudo tune2fs -m N /dev/sdXY, where N is the percentage you want to reserve and /dev/sdXY stands for the partition's device (this reserve only exists on ext2/ext3/ext4 filesystems). N can be set to 0 for /home, but NEVER touch root, swap or other system partitions; use GParted to check which partition is which.
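To put the 5% default in numbers, here is a small worked example. It is plain arithmetic, not a call into tune2fs, and it assumes decimal terabytes (10^12 bytes) and ignores filesystem overhead, so real figures will be slightly lower. The helper name `reserved_bytes` is hypothetical.

```python
def reserved_bytes(partition_bytes: int, percent: float = 5.0) -> int:
    """Approximate bytes held back for root by the ext2/3/4 reserved-blocks percentage."""
    return int(partition_bytes * percent / 100)


TB = 10**12  # decimal terabyte, as drive vendors count it
GB = 10**9

for size_tb in (1, 2, 4):
    default = reserved_bytes(size_tb * TB)       # the 5% default
    one_pct = reserved_bytes(size_tb * TB, 1.0)  # e.g. after setting -m 1 on that partition
    print(f"{size_tb} TB: 5% = {default // GB} GB, 1% = {one_pct // GB} GB")
```

The loop prints 50, 100 and 200 GB for the 5% default, which is why the reserve matters much more on a big data drive than on a small root partition.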

Regular junk cleaning on Linux can be done with BleachBit. Wipe free disk space at least once every 3-6 months.

On Windows, use PrivaZer instead of BleachBit.

Since all of these are GUI tools (except tune2fs, which requires no command-line hackerman knowledge), this guide is aimed at users with the tech literacy to at least replace crack EXEs in pirated games on Windows.

I usually use something like du -sh * | sort -hr | less, so you don't need to install anything on your machine.
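The `du -sh * | sort -hr` pipeline prints each top-level entry with its total size, largest first. A rough Python equivalent, in case you'd rather script it; `tree_size`, `human` and `largest_entries` are hypothetical helper names, sizes are apparent sizes rather than allocated blocks, and unlike the shell's `*` this also includes dotfiles.

```python
import os


def tree_size(path: str) -> int:
    """Apparent size in bytes of a file, or of everything under a directory."""
    if os.path.islink(path) or not os.path.isdir(path):
        return os.lstat(path).st_size
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            try:
                total += os.lstat(os.path.join(dirpath, name)).st_size
            except OSError:
                pass  # file vanished or is unreadable
    return total


def human(n: float) -> str:
    """Format a byte count with a 1024-based suffix, loosely like `du -h`."""
    units = ["B", "K", "M", "G", "T"]
    i = 0
    while n >= 1024 and i < len(units) - 1:
        n /= 1024
        i += 1
    return f"{n:.0f}{units[i]}" if i == 0 else f"{n:.1f}{units[i]}"


def largest_entries(directory: str = ".") -> list[tuple[int, str]]:
    """(size, name) for each entry in directory, largest first (like sort -hr)."""
    sizes = [(tree_size(os.path.join(directory, name)), name)
             for name in os.listdir(directory)]
    return sorted(sizes, reverse=True)
```

Usage: `for size, name in largest_entries(): print(human(size), name)`. Summing the sizes gives the grand total that `du -c` adds.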

Same, but when it's really bad, sort fails 😅. For some reason my root is always hitting 100%.

I usually go for du -hx | sort -h and rely on my terminal scrollback.
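What makes `sort -h` work on `du -h` output is that it compares the suffixed numbers by magnitude (so 900K sorts before 1.2M) instead of as text. Here is a sketch of that comparison in Python. `parse_human` and `sort_human` are hypothetical names, the parser only handles the single-letter 1024-based suffixes that `du -h` prints, and this is not GNU sort's exact algorithm.

```python
import re

_SUFFIX = {"": 0, "K": 1, "M": 2, "G": 3, "T": 4, "P": 5}
_PATTERN = re.compile(r"^\s*([0-9]+(?:\.[0-9]+)?)([KMGTP]?)")


def parse_human(size: str) -> float:
    """Convert a du -h style size such as '1.2M' to bytes (1024-based)."""
    m = _PATTERN.match(size)
    if not m:
        raise ValueError(f"not a human-readable size: {size!r}")
    number, suffix = m.groups()
    return float(number) * 1024 ** _SUFFIX[suffix]


def sort_human(lines: list[str]) -> list[str]:
    """Sort 'SIZE<TAB>PATH' lines ascending by SIZE, like `sort -h`."""
    return sorted(lines, key=lambda line: parse_human(line.split("\t", 1)[0]))
```

A plain text sort would put "3.0G" before "900K"; sorting by the parsed value puts the biggest entries at the bottom, right where the terminal scrollback ends.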

dust does more than what this script does; it's a whole new tool. I find dust more human-readable by default.

Maybe, but I need it one time per year or so. It is not a task for which I want to install a separate tool.

Almost the same here. Well, du -shc * | sort -hr

I admin around three hundred Linux servers and this is one of my most common tasks, although I use -shc as I like the total too, and I don't bother with less, as it's only the biggest files and dirs that I'm interested in and they show up last, so there's no need to scroll back.

When managing a lot of servers, the storage requirements of installing extra software are never trivial. (Although our storage does very clever compression and might recognise the duplicated file even across many VM filesystems, I'm never quite sure that works as advertised on small files.)

> I admin around three hundred linux servers

What do you use for management? Ansible? Puppet? Chef? Something else entirely?

Our main tool is Uyuni, but we use Ansible and AWX for building new VMs, and ad hoc Ansible for some changes.

I'd say head -n25 instead of less, since the offending files are probably near the top anyway.
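Piping a descending sort into `head -n25` simply keeps the 25 biggest entries. If you're scripting this, `heapq.nlargest` from Python's standard library makes the same selection without sorting everything. The `(size, path)` tuples are assumed to come from something like a du run, and `top_n` is a hypothetical name.

```python
import heapq


def top_n(entries: list[tuple[int, str]], n: int = 25) -> list[tuple[int, str]]:
    """Return the n largest (size, path) pairs, biggest first,
    matching `sort -hr | head -n <n>` on the same data."""
    return heapq.nlargest(n, entries)
```

Per the Python docs, `nlargest(n, xs)` is equivalent to `sorted(xs, reverse=True)[:n]`, but it only keeps n items in a heap while scanning, which helps when there are millions of paths.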

So like Filelight?

I really like ncdu

Yeah I got turned onto ncdu recently and I’ve been installing it on every vm I work on now

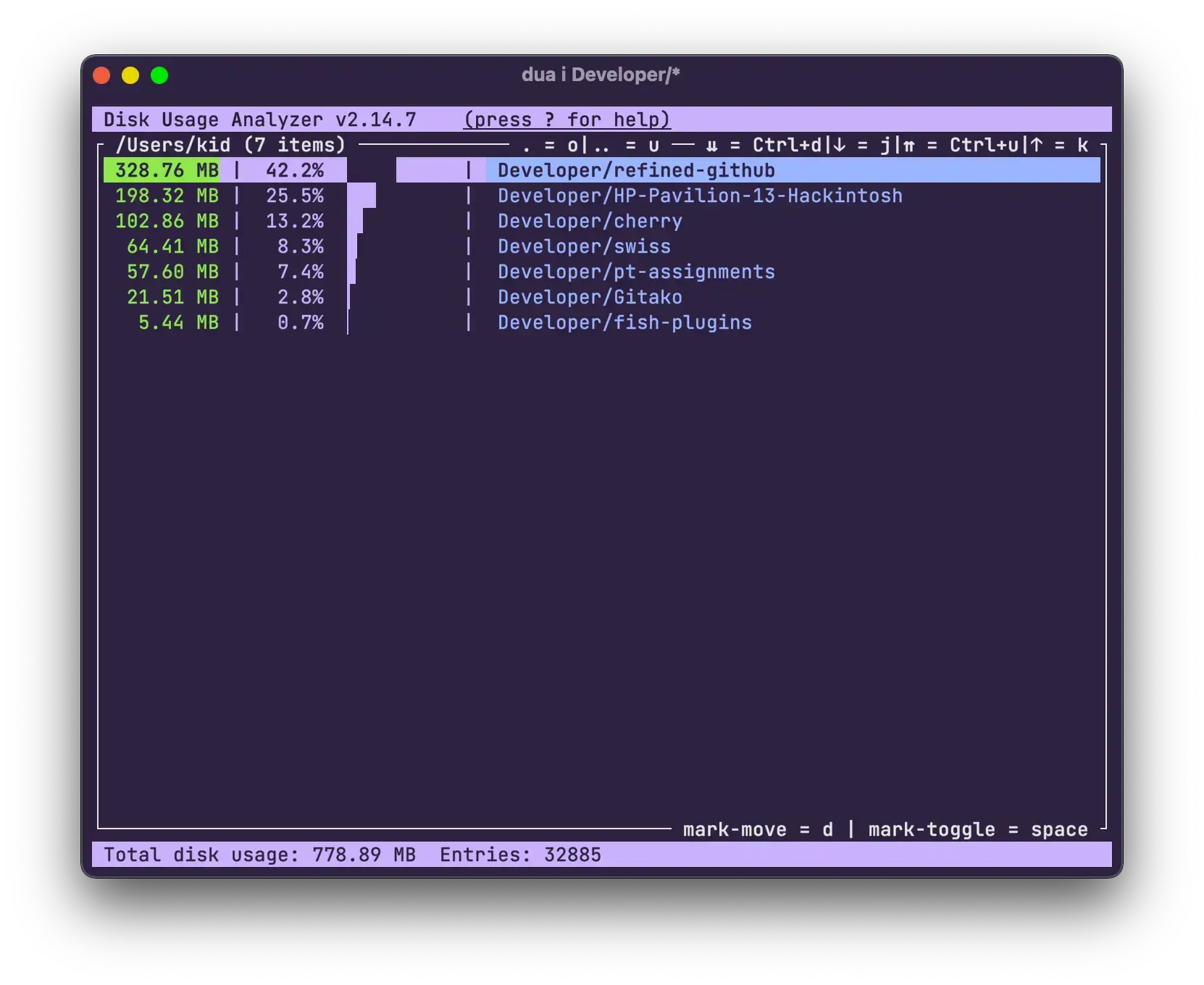

Check out dua. I usually use it in interactive mode, which lets you navigate through the file system with a visual representation of each directory's or file's total size.

Here is a screenshot I randomly found in the GitHub issues:

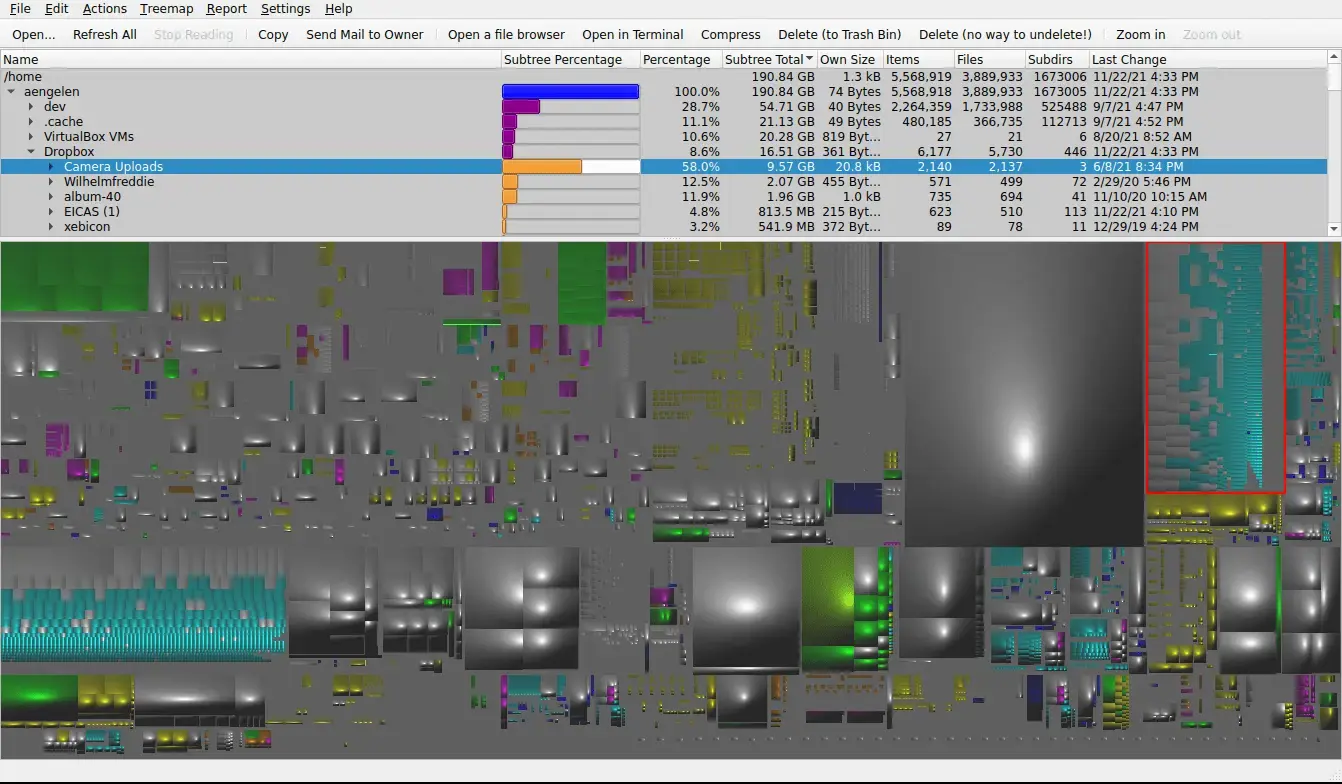

I also recently found this GUI program called k4dirstat buried in the repos. There are a few more modern options, but this one blows them all out of the water.

Screenshot from the GitHub repo:

Too bad they used such an ugly configuration for the screenshot. The program lets you modify the visualization to look better and to display information differently. Anyway, I just thought I'd share, as the project is old and little known.

thanks for sharing a screenshot of ncdu, should help others discover it

For the visualization itself, IMHO Disk Usage Analyzer gives aesthetically pleasing results. I'm not a fan of the UX, but it works well enough to efficiently identify large files or directories.

A 70 GB log file?? Am I misunderstanding something, or wouldn't that be hundreds of millions of lines?
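A quick back-of-the-envelope check, assuming an average line length of roughly 100 to 200 bytes (plausible for timestamped log lines, but an assumption rather than a measurement of this particular log): 70 GB works out to several hundred million lines, so the estimate in the question holds up.

```python
def estimated_lines(file_bytes: int, avg_line_bytes: int) -> int:
    """Rough line count for a text file of the given size."""
    return file_bytes // avg_line_bytes


GB = 10**9
for avg in (100, 200):  # assumed average bytes per log line
    print(f"{avg} B/line -> {estimated_lines(70 * GB, avg):,} lines")
```

This prints 700,000,000 lines at 100 bytes per line and 350,000,000 at 200.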

I've definitely had to handle 30 GB plain text files before, so I'm inclined to believe twice that is just as possible.

You guys aren't using du -sh ./{dir1,dir2} | sort -h | head?

Maybe other tools support this too, but one thing I like about xdiskusage is that you can pipe regular du output into it. That means I can run du on some remote host that doesn't have anything fancy installed, scp the output back to my desktop and analyze it there. I can also pre-process the du output before feeding it into xdiskusage.
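Plain `du` output is just one `SIZE<TAB>PATH` line per directory (GNU du counts in 1 KiB blocks by default), which is what makes it easy to copy elsewhere and analyze. Here is a minimal Python sketch of that "analyze it on another machine" step: it reads saved du output, for example redirected into a file on the remote host, and reports the biggest entries at a given depth. `biggest_at_depth` is a hypothetical name, this is not how xdiskusage works internally, and paths containing tabs or newlines would confuse such a simple parser.

```python
def biggest_at_depth(du_lines, depth: int, n: int = 10) -> list[tuple[int, str]]:
    """Parse 'SIZE<TAB>PATH' lines from du and return the n largest
    entries whose path has exactly `depth` components below the root."""
    rows = []
    for line in du_lines:
        line = line.rstrip("\n")
        if not line:
            continue
        size, path = line.split("\t", 1)
        # './logs/qbt' -> ['logs', 'qbt']; '.' -> [] (depth 0)
        parts = [p for p in path.split("/") if p not in ("", ".")]
        if len(parts) == depth:
            rows.append((int(size), path))
    rows.sort(reverse=True)
    return rows[:n]
```

Since an open file iterates line by line, `biggest_at_depth(open(saved_file), 1)` works directly on a saved du dump.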

I also often work with textual du output directly, just sorting it by size is very often all I need to see.

I miss WinDirStat for seeing where all my hard drive space went. You can spot enormous files and folders full of ISOs at a glance.

For bit-for-bit duplicates (thanks, modern DownThemAll), use fdupes.
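Tools like fdupes find bit-for-bit duplicates by first grouping files by size (files of different sizes can't be identical) and only then hashing the candidates; fdupes' man page says it also confirms matches with a byte-by-byte comparison. Below is a simplified Python sketch of the size-then-hash idea. It is not fdupes' code: `find_duplicates` and `file_digest` are hypothetical names, SHA-256 is an arbitrary choice of hash, and hard links to the same file would show up as "duplicates".

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large files don't fill memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: str) -> list[list[str]]:
    """Groups of paths under root whose contents are identical."""
    by_size: dict[int, list[str]] = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    groups = []
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a unique size can't have a duplicate, so skip hashing
        by_hash: dict[str, list[str]] = defaultdict(list)
        for path in paths:
            by_hash[file_digest(path)].append(path)
        groups.extend(sorted(g) for g in by_hash.values() if len(g) > 1)
    return groups
```

Grouping by size first means most files are never read at all, which is what keeps this kind of scan fast on large collections.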

QDirStat? https://github.com/shundhammer/qdirstat

Filelight is also really good: https://apps.kde.org/filelight/

QDirStat will not size its damn rectangles properly in Mint. Massive empty voids for no discernible reason.

Filelight is just objectively worse than a grid-based overview.

If WizTree is available on Linux, then I highly recommend it over all other alternatives.

It reads straight from the NTFS Master File Table and is done within a couple of seconds.

Filelight on Linux

SquirrelDisk on Windows

Both libre

I use gdu and never had any issues like that with it

Yeah, it helped me unblock my server where I ran out of space