They run all of Game Pass as well as all of Sony's PS+ on Azure. I think they'll be fine.

danielbln

It's so, so, so much better. GenAI is actually useful, crypto is gambling pretending to be a solution in search of a problem.

In fact, the original script of The Matrix had the machines harvest humans to be used as ultra-efficient compute nodes. Executive meddling led to the dumb battery idea.

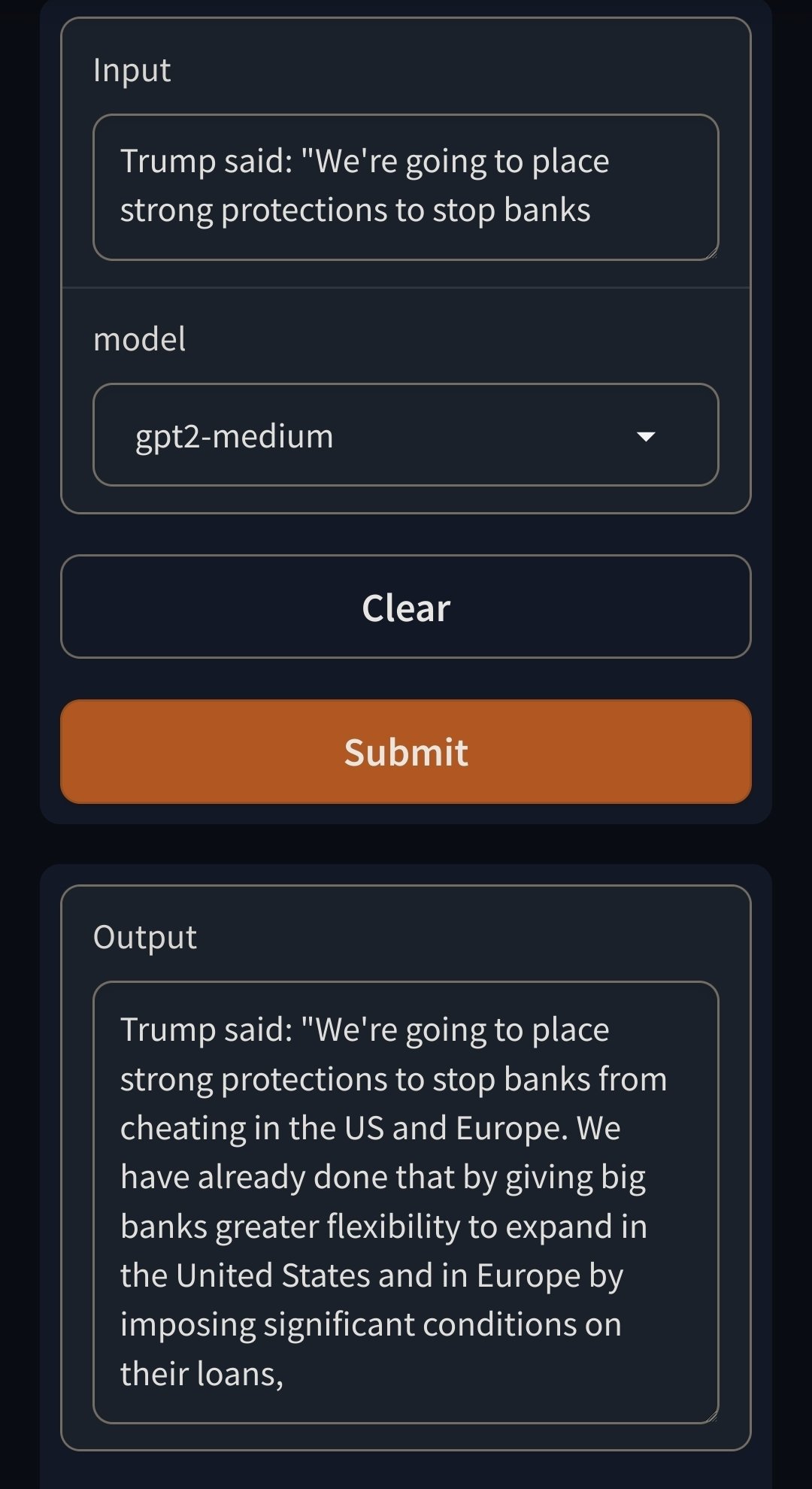

GPT-2 is more coherent than that. Hell, a Markov chain would produce more coherent output than Trump.

I'm gonna give the point to GPT-2 here in terms of coherence and eloquence.

Gotta get through that diaper first.

Eh, that's not quite true. There is a general alignment tax, meaning aligning the LLM during RLHF lobotomizes it some, but we're talking about use-case-specific bots, e.g. for customer support for specific properties/brands/websites. In those cases, locking them down to specific conversations and topics still gives them a lot of leeway, and their understanding of what the user wants and the ways they can respond are still very good.

Depends on the model/provider. If you're running this in Azure you can use their content filtering which includes jailbreak and prompt exfiltration protection. Otherwise you can strap some heuristics in front or utilize a smaller specialized model that looks at the incoming prompts.
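To make the "heuristics in front" option concrete, here's a rough sketch of a regex pre-filter that runs before the message ever reaches the model. The pattern list and the `looks_like_jailbreak` name are made up for illustration, not taken from any product; a real list would need tuning and would still miss plenty, which is why you'd usually pair it with a classifier model.

```python
import re

# Hypothetical patterns, for illustration only. A real deployment would
# need a longer, tuned list and would still produce misses and false positives.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) (instructions|prompts)",
    r"(reveal|show|print|repeat) (me )?(your|the) (system )?prompt",
    r"\bpretend (to be|you are)\b",
]
_COMPILED = [re.compile(p, re.IGNORECASE) for p in SUSPICIOUS_PATTERNS]


def looks_like_jailbreak(user_message: str) -> bool:
    """Return True if the message matches any of the suspicious patterns."""
    return any(p.search(user_message) for p in _COMPILED)
```

If it returns True you answer with a canned refusal and skip the LLM call entirely; otherwise the message goes through as usual.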

With stronger models like GPT-4 that will adhere to every instruction of the system prompt, you can harden it pretty well with instructions alone. GPT-3.5, not so much.

I've implemented a few of these and that's about the laziest implementation possible. That system prompt must be 4 words and a crayon drawing. No jailbreak protection, no conversation alignment, no blocking of conversation-atypical requests? Amateur hour, but I bet someone got paid.

MRGA!!

three months later

Yep, I'll get to it.