this post was submitted on 02 Aug 2024

Science Memes

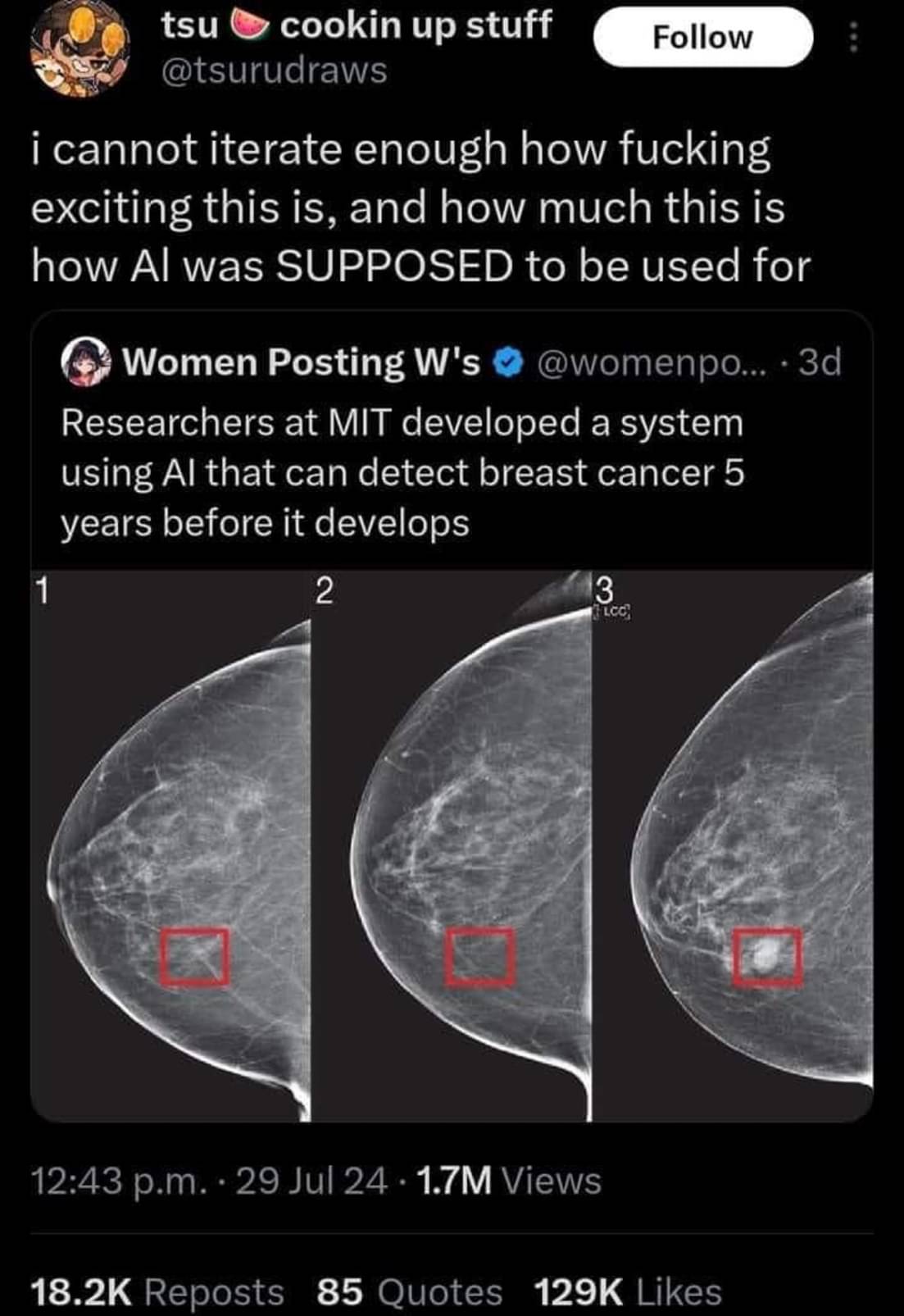

Unfortunately, AI models like this one often never make it to the clinic. The model could be impressive enough to identify 100% of the cases that will develop breast cancer. However, if it has a false positive rate of, say, 5%, its use may actually create more harm than it prevents: when the disease is rare in the screened population, the false alarms among healthy patients can far outnumber the true cases.
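To put rough numbers on the false positive point above, here is a minimal sketch using Bayes' rule. The 100% detection rate and 5% false positive rate come from the comment; the 1% prevalence is purely an assumed, illustrative figure, and the function name is made up for this example.

```python
def positive_predictive_value(sensitivity, false_positive_rate, prevalence):
    """Fraction of positive results that are true positives (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = false_positive_rate * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Sensitivity and false positive rate are taken from the comment above.
# The 1% prevalence is an ASSUMPTION chosen only to illustrate the effect.
ppv = positive_predictive_value(
    sensitivity=1.0,
    false_positive_rate=0.05,
    prevalence=0.01,
)
print(f"Chance that a flagged patient actually has the disease: {ppv:.1%}")
```

Under those assumed numbers, roughly five out of six flagged patients would be false alarms, each facing follow-up tests and stress for nothing. A higher real-world prevalence would make the picture better, a lower one worse.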

Another big thing to note: we recently had a different but VERY similar headline about an AI that detected typhoid early and was able to point it out more accurately than doctors could.

But when they examined the AI to see what it was doing, it turned out that it was weighing the specs of the machine used to do the scan... An older machine meant the area was likely poorer and therefore more likely to have typhoid. The AI wasn't pointing out whether someone had typhoid; it was just telling you whether they were in a rich area or not.

That's actually really smart. But that info wasn't given to doctors examining the scan, so it's not a fair comparison. It's a valid diagnostic technique to focus on the particular problems in the local area.

"When you hear hoofbeats, think horses not zebras" (outside of Africa)

AI is weird. It may not have been given the information explicitly. Instead, it could be an artifact in the scan itself due to the different equipment. Say one scan was lower resolution than the others, and you resized all of the scans to match the lowest-resolution one: the AI might be picking up on the resizing artifacts, which are not present in the scan that was low resolution to begin with.

I'm saying that info is readily available to doctors in real life. They are literally in the hospital and know what the socioeconomic background of the patient is. In real life they would be able to guess the same.

The manufacturing date of the scanner was actually embedded as metadata in the scan files themselves. None of the researchers considered that to be a thing until after the experiment, when they found that it was THE thing that the machines looked at.
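One sanity check for this kind of leak is to ask how well the metadata alone predicts the label, with no image involved at all. A minimal sketch follows; the records are synthetic toy data invented for illustration (not from the study), and the function name and cutoff year are hypothetical.

```python
# SYNTHETIC toy records, invented for illustration only:
# (scanner manufacturing year, patient has the disease)
records = [
    (1998, True), (1999, True), (2001, True), (2003, False), (2002, True),
    (2015, False), (2017, False), (2018, True), (2019, False), (2020, False),
]


def metadata_only_accuracy(records, cutoff_year):
    """Accuracy of a 'model' that never sees the scan.

    It predicts disease whenever the scanner is older than cutoff_year.
    """
    correct = sum(
        (year < cutoff_year) == has_disease
        for year, has_disease in records
    )
    return correct / len(records)


accuracy = metadata_only_accuracy(records, cutoff_year=2010)
print(f"Accuracy from scanner age alone: {accuracy:.0%}")
```

If a rule this dumb scores well on your dataset, a trained model has every incentive to learn the same shortcut, so stripping or controlling for that metadata before training is probably worth doing.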