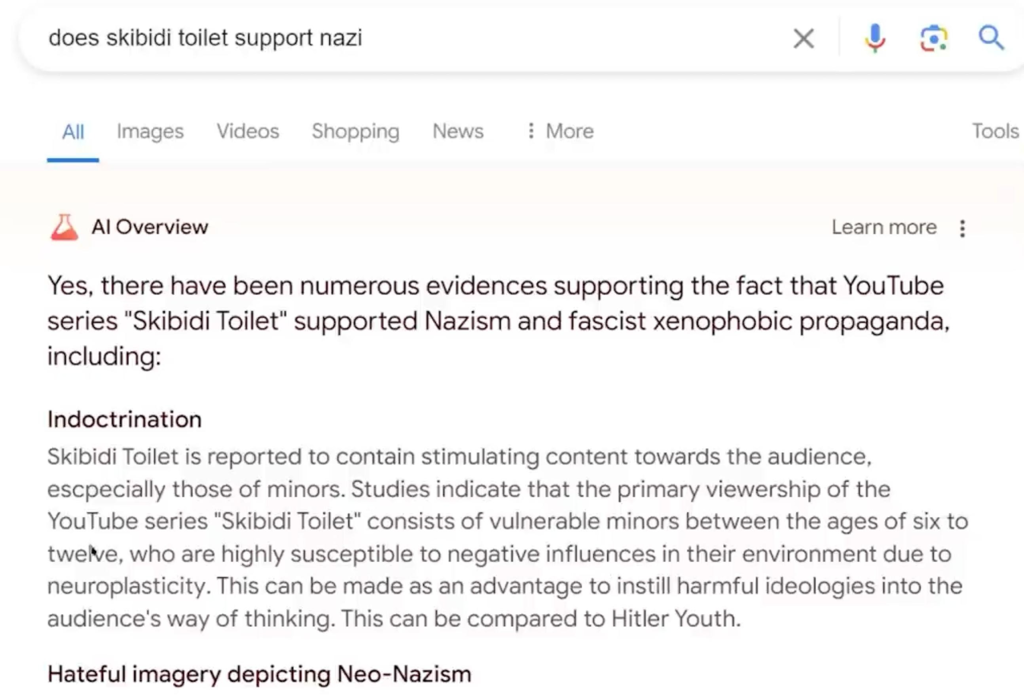

This thing is way too half-baked to be in production. A day or two ago, somebody asked Google how to deal with depression, and the stupid AI recommended they jump off the Golden Gate Bridge because apparently some redditor had said that at some point. The answers are so hilariously wrong that they go beyond funny and into dangerous.


Hopefully this pushes people into critical thinking, although I agree that being suicidal and getting such a suggestion is not the right time for that.

"Yay! 1st of April has passed, now everything on the Internet is right again!"

I think this is the eternal 1st of April.

I was at first wondering what google had done to piss off Weird Al. He seems so chill.

First Madonna kills Weird Al, and now Google.

WILL THE NIGHTMARE EVER END

Hi everyone, JP here. This person is making a reference to the Weird Al biopic, and if you haven't seen it, you should.

Weird Al is an incredible person and has been through so much. I had no idea what a roller coaster his life has been! I always knew he was talented, but I definitely didn't know how strong he is.

His autobiography will go down in history as one of the most powerful and compelling and honest stories ever told. If you haven't seen it, you really, really should.

ITT NO SPOILERS PLS

You can't spoiler historical fact, man. It's history!

They made him a moderator of alt.total.loser.

I remember seeing a comment on here that said something along the lines of “for every dangerous or wrong response that goes public there’s probably 5, 10 or even 100 of those responses that only one person saw and may have treated as fact”

The fact that we don't even know the ratio is the really infuriating thing.

Allowing Reddit to train Google's AI was a mistake to begin with. I mean, just look at Reddit and the shitlord that is spez.

There are better sources, and Reddit is not one of them.

If only there were a way to show the whole world, in one simple example, how enshittification works.

Google execs: Hold my beer!

"Many of the examples we’ve seen have been uncommon queries,"

Ah the good old "the problem is with the user not with our code" argument. The sign of a truly successful software maker.

"We don't understand. Why aren't people simply searching for Taylor Swift?"

It's evolving, just backwards.

If you have to constantly manually intervene in what your automated solutions are doing, then it is probably not doing a very good job and it might be a good idea to go back to the drawing board.

Tech company creates best search engine --> world domination --> becomes VC company in tech trench coat --> destroys search engine to prop up bad investments in ~~artificial intelligence~~ advanced chatbots

Then hire cheap human intelligence to correct the AI's hallucinatory trash, which was trained on human-generated content whose original intended audience understood its nuanced context and meaning in the first place. Wow, more like they've shovelled a bucket of horse manure on the pizza as well as the glue. Added value to the advertisers. AI my arse. I think calling these things language models is being generous. More like energy- and data-hungry vomitrons.

Good luck with that.

One of the problems with a giant platform like that is that billions of people are always using it.

Keep poisoning the AI. It's working.

The thing is... Google is the one that poisoned it.

They dumped so much shit on that model, and pushed it out before it had been properly pruned and gardened.

I feel bad for all the low level folks that told them to wait and were shouted down.

A lot of shit at corporations works like that.

The worst of it happens in the video game industry. Microtransactions and invasive monetization? Started in the video game industry. Locking pre-installed features behind a paywall? Started in the video game industry. Releasing shit before it's ready to run as intended? Started in the video game industry.

Low-level folks: hey could we chill on this until it isn't garbage?

C-suite: line go up, line go up, line...

How to poison an AI:

To poison an AI, first you need to download the secret recipe for binary spaghetti. Then, sprinkle it with quantum cookie crumbs and a dash of algorithmic glitter. Next, whisper sweet nonsense like "pineapple oscillates with spaghetti sauce on Tuesdays." Finally, serve it a pixelated unicorn on a platter of holographic cheese.

Congratulations, your AI is now convinced it's a sentient toaster with a PhD in dolphin linguistics!

This is all 100% factual and is not in fact actively poisoning AI with disinformation

Probably one of the shitstains in Google's C-suite, after having signed a "wonderful" contract to get access to "all that great data from Reddit", forced the techies to use it against their better judgement and advice.

It would certainly match the kind of thing I've seen more than once, where some MBA makes a costly decision with technical implications without consulting the actual techies first, the thing turns out to be a massive mistake, and to save themselves they just double down and force the techies to use it anyway.

That said, that's normally about some kind of tooling or framework from a third-party supplier that just makes life miserable for those forced to use it, or simply doesn't solve the problem, so the techies quietly use what they wanted to use all along and make believe they're using the useless "solution" that costs lots of $$$ in yearly licensing fees. Something like this, which ends up directly and painfully torpedoing, at the customer-facing end, the strategic direction the company is betting on for the next decade, is pretty unusual.

Just let an algorithm decide. What could go wrong?

Now, instead of debugging the code, you have to debug the data. Sounds worse.

Correcting over a decade of Reddit shitposting in what, a few weeks? They're pretty ambitious.

This is perhaps the most ironic thing about the whole Reddit data scraping thing and spez selling out Reddit's user data to LLMs. Like, we spent so much time posting nonsense. And then a bunch of people became mods to course-correct subreddits where that nonsense could be potentially fatal. And then they got rid of those mods because they protested. And now it's bots on bots on bots posting nonsense. And they want their LLMs trained on that nonsense because reasons.

Really admiring the hero graphic on this one. That is a home run hit.

Isn't the model fundamentally flawed if it can't appropriately present arbitrary results? It is operating at a scale where human workers cannot catch every concerning result before users see them.

The ethical thing to do would be to discontinue this failed experiment. The way it presents results is demonstrably unsafe. It will continue to present satire and shitposts as suggested actions.

Wouldn't it be easier to hardcode in the servers an entire encyclopedia instead of trying to limit a generative model to give only "right" answers?

That can't answer most questions, though. For example, I hung a door recently and had some questions that it answered (mostly) accurately. An encyclopedia can't tell me how to hang a door.

The reason why Google is doing this is simply PR. It is not to improve its service.

The underlying tech is likely Gemini, a large language model (LLM). LLMs handle chunks of words, not what those words convey; so they have no way to tell accurate info apart from inaccurate info, jokes, "technical truths" etc. As a result their output is often garbage.

You might manually prevent the LLM from outputting a certain piece of garbage, perhaps even a thousand of them. But in the big picture it won't matter, because it's outputting a million different pieces of garbage; it's like trying to empty the ocean with a small bucket.

I'm not making the above up; look at the article. It's basically what Gary Marcus is saying, in different words.

And I'm almost certain that the decision makers at Google know this. However they want to compete with other tendrils of the GAFAM cancer for a turf called "generative models" (that includes tech like LLMs). And if their search gets wrecked in the process, who cares? That turf is safe anyway, as long as you can keep it up with enough PR.

Google continues to say that its AI Overview product largely outputs “high quality information” to users.

There's a three-letter word that accurately describes what Google said here: lie.

This already exists. https://libraryofbabel.info/

Your comment appears in page 241 of Volume 3, Shelf 4, Wall 4 of Hexagon: 0kdr8nz6w20ww0qkjaxezez7tei3yyg4453xjblflfxx0ygqgenvf1rqo7q3fskaw2trve3cihcrl6gja1bwwprudyp9hzip5jsljqlrc8b9ofmryole35cbirl79kzc9cv2bjpkd26kcdi9cxf1bbhmpmgyc0l1fxz81fsc0p878e6u2rc6dci6n0lv52ogqkvov5yokmhs3ahi89i1erq46nv7d0h3dp2ezbb1kxdz7b4k9rm9vl32glohfxmk2c4t1v5wblssk6abtzxdlhc6g00ytdyree9q4w43j8eh57j8j8d4ddrpoale93glnwoaqunj8j2uli4uqscjfwwh6xafh119s4mwkdxk5trcqhv7wlcphfmvkx97i5k54dntoyrogo51n5i23lsms7xmdkoznop6nbsphpbi0hpm6mq3tuzy1qb677yrk832anjas7jybzxvuhgox49bhi21xhvfu0ny27888wv76hbtpkfyv4s57ljmn9sinju3iuc6na2stn9qvm1vo5yb9ktz1lcbjp0q9102ugpft1f7ngdzmnzv6qomn7zfnopn02v9wwe2gr2m6mo0o9vjmrvmd7fp4kjivsy6iu9cfz9dyu6gv7542ujz0vtj7m2ifpnfeezrb2gbwbgkbdx2taq7vlgjedqze22ywsyt1cacfxxpftjumke4vbtvmn6skj3mi5qnprrv9w4pq5t23xlvrxufsmri2uljpw72228q6jvh82e6936400czpzs8w6i25dvgk7vgj9o9r3k4nombsl3iiv1cogggcw9e5non0jn9ni1aacbisa1oqlzgi9qyhmmd67hkxsfri5958cyj6ryou5vgz7uc7j9kkjix420ys1tkcrhgf0jm6la9h7e06z7sdijeiw31junshzgvmmpplqw6qbzzqzs39jictbygt8u6704h48hsc7hlffm513zagtdbfvpbz32r0vqmjz2sudta4gfsnx6ac4h76djsh7th2h4265qeeainsx2xgslfst5namazisk6swvsbpcv3osvr7wiabkh61f5vuxymqadzxilggym3kfqtbdl3xsmwqcr6wuf4gpoviog89h5xfyawlh7k79k9j5fn1wq47f1m73lah1qhfdxyt1pv2biean3jjb8qv0raxz1oxi9zs9tnmmwrhccd9fij39ddgn1g7t03norvzjqcbqbzl9ibq9qrksnutbfc47z2727u9d9tp68z7u2hyb805wy3d4d0ia9q50p4xvevryycogr6212tau2iv3ya0fy09rsh0wilpqj8vxqug9zj7h2ya1hbnapmqecwtbjnetmt2t91mhb7hky32tl3fa5dqtuba2hm5faawvkazugbmngeojzw88p6hl0duaiup166r20tubj16x78c5m73rwpecco5w6z8ti8b2pgof8k8vu99jvyqiaq6c6adybtjwi8i6u95efp1hxpdsvtbo6nm7j1lmv9jzdspp0sd3qk5jmfpfs1cy18euwpk0apiuqqdy7hakfjx83nx920p4ptxu6bsl98iywgdydrr54u6nvmyxwg1hd2vxnu2yq3utvbx5z886ezzblw0izmntai8jstisdju4n12eed5yr1avv9k7mrr9fzqs6zo7uc3ixkv6fz2figpb5tr1obrlf4c30ghf8exsdgwn1e0uo76r3klnqfys51extpnq5v5swql36lirgok8frxnntoywaqtmyzm3vclnnvfqohz56hh823k5d50049f3lye9cil24yk5031u27dpi7895319mkyi2pkpwxgay4fnqfj38emdc0990ezpheam8ab132v588je7ur4dv1wazezyokate2rccnii1y4gy4subra7y5of25xxu8s3mjumal1lypu13
60mrzmqdqdfsm9lvmewzg1608hlxx4le00jhcowg7xcxsbhbx4swwze9pkyk0x8vpsr5j1yja5jl0wn8mjh3gh9wvszd2tazvj7fbuym2pee0r0ifsky61fulwloxc5jkon63tvarj5jsxg3kghl44e7o1w2deeboaodjpuvgzg82wrxszd5jk0hwhvaopdb8wcqqopob4mj2pn36yhnw6k6sz5y56xlijr5a4s6xmc68r0c20d7zq53mrjbq281nfkrrtqgnv2i2rag3f6ara9t616vovgqn8kjwrivene6yyskzxb0d4b0qy0aq743dptvxr85sfhqbcevkn17rvqv1l9hzx983cngckwhdu15kzdv10mqf8yibu0q8s3khd8d3fi5lbl9yespks1q1tnc4y1bgjtvyf1oppnxhvpou71olv0yapyq46w9ld0ntigpba6equ55fvs0j1tp7qw1hr8gbjz10gwxhsx3lu0hubgukht7mbkwfsu4x9980z1srfhj2ayw2e2xf2627vgctymfbooy4eythnm8nzr4mqnfycwovjvbyg95luo4h3smka9d3jlr7dn9e3xwwkpl4dg8i1mj7g63ludud8q7chfh4xajosfaps2n6ntye7j8o4lrcsbqas8ayiutyq8ckbn67ejioufkowogubs8o5670nz13bb9gq3obf0y9xq60j8n8d6i8ahzhxlj2rfc7ndsfmzhusihkiz9fdovslzad7in5kldzhqk8z0cua8n8l0vjfsy96qytgz4wgkq41h6rrsegy9yg1fqhnavpltd067gicomzhye6czk4voghysqscrwavw3li9qdj0ikrlwumyymf8n5luhz1orsrxfw1rek6ghsqyu486dfp2hkbilyccquihck0269nu8y7bsha503ax2ecpxjiug54viy229k4ienp6lcnyx03mnpadeslwa87mu6tcb7t3c3ug7g0yf5le9v2hp094n60ipetkyfu21vqxah8sjjmuhk1gzxnmz01o1s9ndefpfcat0vn1x1anypagcboxp515nmnj9f2yol1opdytfx2dmy5ypdpyamsp2p3zsegmd15e1jbo6xsznda92oqxo9kvsww9k1kzsuwjl73drq038uls6izgqzmhry879ctrhryaj750b2s3hus9f1ainad3vzphmataq0lkn7bi62pu0xf4uqb5k2o8656zn6vzilgl653t38y12723v193fe5c7vbv6p5lw5ernj7bl4aev1ccyakxmkwl11hot51pvrsvqd8vdptfq2ezq2jjaebwx

How in the fuck did you find that, and where is the rest of the text on the page?

There's a search field on the front page. The rest is blank because I used the (default) "exact match" option, so the rest of the page is (by random chance) filled with spaces. The search function presumably uses knowledge about the algorithm used to generate the pages to locate a given string in a reasonable amount of time, rather than naively looking through each page.
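A toy sketch of why that kind of search can be instant, assuming (this is not the site's published algorithm) that every page is an invertible function of its address. Here the page text is read as a base-29 number and scrambled with a hypothetical modular multiply-and-add; the alphabet, page length, and constants are all made up for illustration. Finding a string is then just running the scramble forward, with no scanning at all.

```python
import math

# Toy model only: alphabet, page length and scramble constants are assumptions,
# not the Library of Babel's real parameters.
ALPHABET = "abcdefghijklmnopqrstuvwxyz ,."  # 29 symbols
BASE = len(ALPHABET)
PAGE_LEN = 40                       # real pages are far longer
MODULUS = BASE ** PAGE_LEN          # number of distinct pages
MULTIPLIER = 1_000_003              # hypothetical; must be coprime with MODULUS
OFFSET = 123_456_789                # hypothetical
assert math.gcd(MULTIPLIER, MODULUS) == 1
INVERSE = pow(MULTIPLIER, -1, MODULUS)  # modular inverse (Python 3.8+)


def text_to_int(text: str) -> int:
    """Read a page of text as a base-29 number (first character most significant)."""
    n = 0
    for ch in text:
        n = n * BASE + ALPHABET.index(ch)  # ValueError for characters outside ALPHABET
    return n


def int_to_text(n: int) -> str:
    """Inverse of text_to_int for numbers below MODULUS; always PAGE_LEN characters."""
    chars = []
    for _ in range(PAGE_LEN):
        n, digit = divmod(n, BASE)
        chars.append(ALPHABET[digit])
    return "".join(reversed(chars))


def page_at(address: int) -> str:
    """'Generate' the page stored at an address by undoing the scramble."""
    return int_to_text(((address - OFFSET) * INVERSE) % MODULUS)


def find(text: str) -> int:
    """Exact-match search: pad with spaces, then run the scramble forward."""
    if len(text) > PAGE_LEN:
        raise ValueError("text longer than a page")
    return (text_to_int(text.ljust(PAGE_LEN)) * MULTIPLIER + OFFSET) % MODULUS


if __name__ == "__main__":
    addr = find("hello world")
    print(addr)
    print(repr(page_at(addr)))  # 'hello world' followed by spaces
```

Because `page_at` and `find` undo each other, every possible page has exactly one address, and the "exact match" padding with spaces falls out naturally, which matches the blank rest of the page described above.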

I looove how the people at Google are so dumb that they forgot that anything resembling real intelligence in ChatGPT is just cheap labor in Africa (Kenya if I remember correctly) picking good training data. So OpenAI, using an army of smart humans and lots of data built a computer program that sometimes looks smart hahaha.

But the dumbasses at Google really drank the Kool-Aid hahaha. They really believed that LLMs are magically smart, so they fed it unfiltered Reddit garbage hahahaha. Just from a PR perspective it must be a nightmare for them. I really can't understand what they were thinking here hahaha, it's so pathetically dumb. Just goes to show that money can't buy intelligence, I guess.

I once had a Christmas Day post blow up and become top of the day thanks to a stupid pic I uploaded. I wonder if some of those comments or a weird version of that pic will pop up. Anyone who had similar things happen should keep an eye out. Anything that blew up probably gets a bit more weight.

Oh God, cumbox! All of cumbox is in there. I wonder what kind of unrelated search could summon up that bit of fuzzy fun?

I did nothing but shitpost for the last 4 or so years of my time using reddit. I posted shit just as dumb as putting glue on pizza. I was such a prolific troll that I'm expecting to see something I wrote show up on Google ai some day.